According to Vice’s Motherboard, an anonymous Microsoft contractor provided recordings of people using Skype’s translation feature. The clips typically run 5–10 seconds, though they aren’t restricted to that length. To help Microsoft improve the feature’s capabilities, these contractors listen to what users have said and select from a list of possible translations or, in some cases, provide their own.

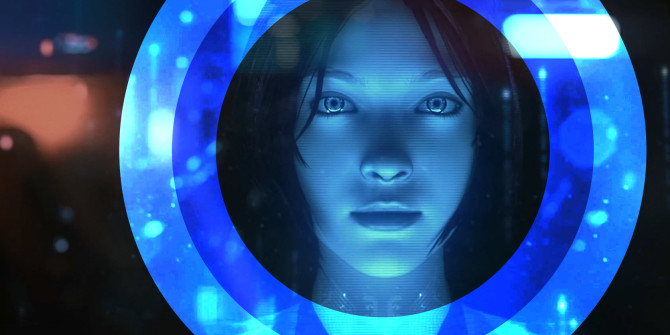

When asked about this setup, Microsoft representatives told Motherboard that the company makes these recordings accessible through a secure online portal and that it takes steps, which it did not describe, to remove any associated information that could be used to identify a user after the fact. However, that doesn’t stop people from revealing details about themselves (like their address) when talking to a digital assistant like Cortana, and there doesn’t appear to be anything in place to prevent Microsoft’s contractors from hearing that kind of spoken data.

According to a statement Microsoft provided to Motherboard:

“Microsoft collects voice data to provide and improve voice-enabled services like search, voice commands, dictation or translation services. We strive to be transparent about our collection and use of voice data to ensure customers can make informed choices about when and how their voice data is used. Microsoft gets customers’ permission before collecting and using their voice data. We also put in place several procedures designed to prioritize users’ privacy before sharing this data with our vendors, including de-identifying data, requiring non-disclosure agreements with vendors and their employees, and requiring that vendors meet the high privacy standards set out in European law. We continue to review the way we handle voice data to ensure we make options as clear as possible to customers and provide strong privacy protections.”

Microsoft’s practices are very common, and this is not the first report of this sort. Many apps that use our voices to provide convenient services need to be trained somehow, and most of the time computers can’t train themselves. There are generally humans involved somewhere along the way. Apple, Amazon, and Google faced similar criticism after it was reported that each used human reviewers and transcribers to improve their respective Siri, Alexa, and Google Assistant services. Those reports prompted concerns that recordings of users’ requests and private conversations, some including confidential medical information, were being listened to by human ears, too.

Apple and Google temporarily suspended their contractor review and analysis practices. Amazon changed its policy to explicitly say that humans might review Alexa recordings and to provide a way for users to opt out. The Skype revelation, though, is particularly troubling because person-to-person Skype calls are far more likely to be sensitive than an Alexa or Siri request, though those clearly can be sensitive, too.